
Generative AI in teaching

OVERVIEW

Generative artificial intelligence (AI) presents new opportunities and challenges for teaching and learning. Below, you’ll find some frequently asked questions and important considerations when engaging and experimenting with generative AI.

Like any technology, generative AI has benefits and limitations for both instructors and students. The rapid advancement of generative AI can make it difficult to keep up with the latest tools and the outputs they can produce. Staff at the Centre for Educational Excellence (CEE) are well placed to support critical and creative uses of generative AI to enhance curriculum, courses, and assignments.

CEE offers departmental and unit sessions on the following topics:

- Generative AI and Academic Integrity

- Generative AI and Online Courses

- Generative AI and Student Experience

- Generative AI and Personal and Department Teaching Goals

Don’t see the topic you’re interested in? Contact us, and we will work with you to develop a session that meets your teaching and learning needs.

CONSIDERATIONS

Generative AI applications are trained on different datasets to create predictive outputs (for example, text, images, audio, and video) in response to user prompts. These outputs will always reflect the biases and limitations of the dataset the application draws on. For ChatGPT, the initial training dataset included 300 billion words drawn from a variety of online texts and resources.

Some generative AI tools require users to share personal information to create accounts. The prompts users enter may be added to the tool's training dataset, which means users may be contributing information, and in some cases their intellectual property, to a databank. Learning how, where, and why information is shared with a generative AI tool can help users make informed choices about whether and how to use it.

To learn more about SFU’s Privacy Management Program, including how to use generative AI appropriately, comply with British Columbia legislation, and protect your own and your students’ privacy, visit the Archives and Records Management page on the Freedom of Information and Protection of Privacy Act (FIPPA): https://www.sfu.ca/archives/fippa.html

Generative AI tools, which are increasingly embedded in search engines and other software, can consume significant energy. The total amount of carbon dioxide these tools generate cannot yet be fully determined, but some estimates suggest that training a model alone, before any outputs are generated, “produces 626,000 pounds of planet-warming carbon dioxide, equal to the lifetime emissions of five cars” (Martineau, 2022).

GETTING STARTED WITH GENERATIVE AI

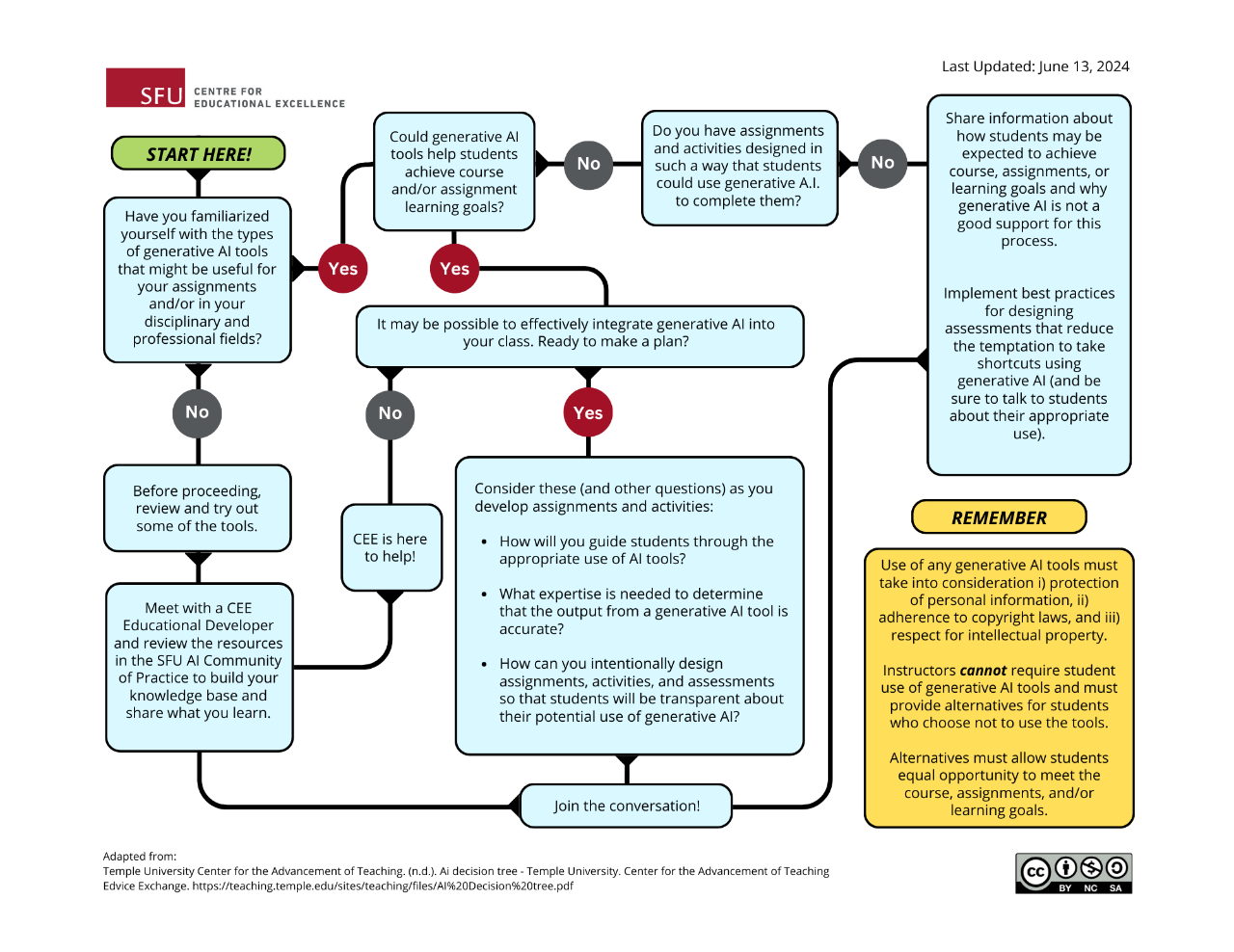

Reminder: Instructors cannot require students to use generative AI tools and must provide alternatives for students who choose not to use them. Alternatives must give students an equal opportunity to meet course, assignment, and/or learning goals.

GENERATIVE AI IN CURRICULUM, COURSES, AND ASSIGNMENTS

Curriculum

At the curriculum level, programs, departments, faculties, and the university work with stakeholders to develop, assess, and refine curriculum.

The Centre for Educational Excellence actively supports curriculum mapping that addresses generative AI, with a focus on overall educational priorities and goals, such as those framed by Dr. Kathleen Landy (2024):

What do we want students in our academic program to know and be able to do with (or without) generative AI?

At what point in our academic program – that is, in what specific courses – will students learn these skills?

Does our academic program need a discipline-specific, program-level learning outcome about generative AI?

Courses

If you permit, or even encourage, students to use generative AI to support their ongoing learning and the completion of assignments in a course, state this clearly. Creating opportunities and mechanisms for students to disclose their use of these tools builds openness and transparency.

Scaffolded assignments that ask students to critically analyze and reflect on their use of generative AI tools encourage them to describe their learning process and how they are developing the skills to assess and critique information and draw conclusions from it.

Including or refining course goals related to developing generative AI literacy can help students make explicit connections between their course work and skills that they will continue to use in their university careers and beyond.

Checking in with students about how they use generative AI can reveal critical and creative applications. Dr. Zafar Adeel of the School of Sustainable Energy Engineering notes that allowing the use of generative AI tools, with attribution, helped students develop critical questions:

"I told my class they were allowed to use ChatGPT for take-home assignments and that they would not be penalized as long as they identified how they are using it. I saw a couple of trends. One was that, overall, relatively few people used ChatGPT or admitted to using it. Another trend I noticed was that there were one or two students who were using ChatGPT a lot more innovatively. They weren't just plugging in the assignment problem, and copying and pasting the answer, but they were asking more probing questions and generating more in-depth responses, which I thought was quite interesting".

Computer Science instructor and AI researcher Parsa Rajabi shares his philosophy regarding student use of generative AI in his course syllabus:

"I view AI tools as a powerful resource that you can learn to embrace. The goal is to develop your resilience to automation, as these tools will become increasingly prevalent in the future. By incorporating these tools into your work process, you will be able to focus on skills that will remain relevant despite the rise of automation. Furthermore, I believe that these tools can be beneficial for students that consider English as their second [language] and those who have been disadvantaged, allowing them to express their ideas in a more articulate and efficient manner."

Assignments

As with any assignment design, choosing to incorporate or prohibit use of generative AI in an assignment should begin with careful consideration of the course and educational goals.

Demonstrating ethical and effective use of generative AI tools for specific assignments can help students progress towards learning goals and develop critical thinking skills as they create and refine prompts for large language models (LLMs) such as ChatGPT.

Dr. Leanne Ramer, SFU senior lecturer in the Department of Biomedical Physiology and Kinesiology, describes how she uses generative AI as a starting point for students working on research assignments and to demonstrate her process as a researcher:

"I show students how to use [ChatGPT] to generate ideas for research papers. It can provide a very high-level summary of a field and help us draft a strategic search in an academic database. We experiment with asking the right questions and using the right prompts – and this is crucial: leveraging AI effectively is a craft that students need to hone".

ChatGPT can also be used to help students practise meta-cognition and learn how to learn. As apprentices in their disciplines, students rely on faculty to make their strategies and thinking for tackling content explicit, and [generative AI] can be leveraged in this process.

Geography lecturer Dr. Leanne Roderick describes how she designed an assignment that gave students the option to integrate generative AI into their work:

"I provided my students with a generative AI assignment option. In the assignment, I provided the prompt and their task was to improve the content so that it more accurately reflected the course material and demonstrate to me what strategies they used to do so. They were still working with the content, so their learning outcomes were the same—it was just a different route to them. In fact, some of the students said they wished they would have just answered the questions themselves because that would [have] been easier."

Contact CEE today to learn more about how we can support your work with generative AI in teaching and learning.

REFERENCES

- Landy, K. (2024, February 28). The next step in higher ed’s approach to AI. Inside Higher Ed. https://www.insidehighered.com/opinion/views/2024/02/28/next-step-higher-eds-approach-ai-opinion

- Martineau, K. (2022, August 7). Shrinking deep learning’s carbon footprint. MIT News. https://news.mit.edu/2020/shrinking-deep-learning-carbon-footprint-0807

- Mollick, E. R., & Mollick, L. (2023, September 23). Assigning AI: Seven approaches for students, with prompts. SSRN. http://dx.doi.org/10.2139/ssrn.4475995

- Pasick, A. (2023, March 27). Artificial intelligence glossary: Neural networks and other terms explained. The New York Times. https://www.nytimes.com/article/ai-artificial-intelligence-glossary.html

- Satia, A., Verkoeyen, S., Kehoe, J., Mordell, D., Allard, E., & Aspenlieder, E. (2023). What is generative AI? In Generative artificial intelligence in teaching and learning at McMaster University. https://ecampusontario.pressbooks.pub/mcmasterteachgenerativeai/part/a-brief-introduction-to-generative-ai/

- University of Oxford. (n.d.). Use of generative AI tools to support learning. https://www.ox.ac.uk/students/academic/guidance/skills/ai-study#:~:text=Generative%20AI%20tools%20can%20be,through%20teaching%20and%20independent%20learning