Early Concept

Our early concept was a visualization in the form of waves, since a waveform depicts the input of the user’s voice more accurately. We eventually settled on a more artistic visualization of an ‘eye’, in which the audio input is displayed in a circle that resembles an eye.

Explanation of the interaction

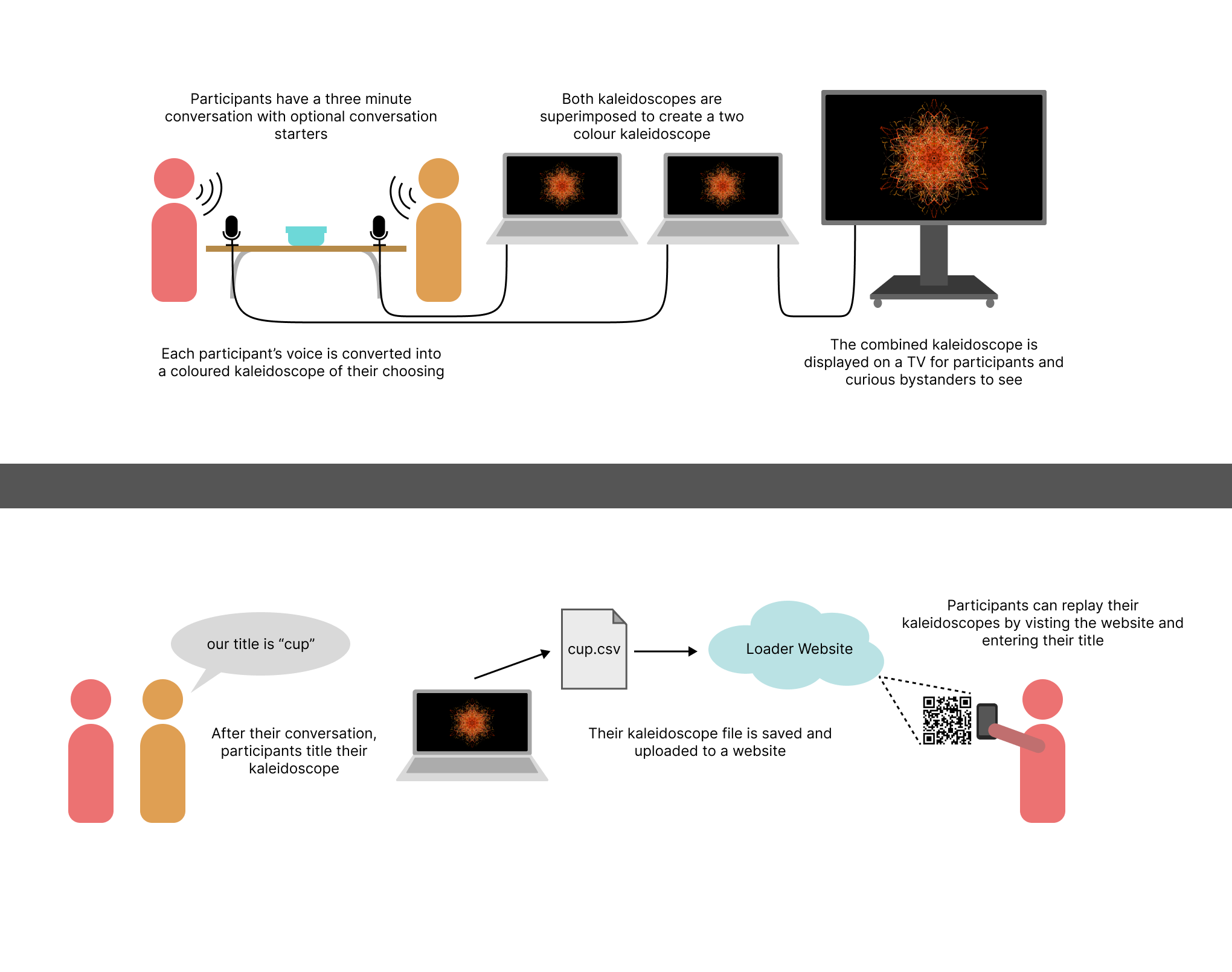

Participants are seated at a round table with two microphones, where they have a three-minute conversation. Before talking, each participant is asked to pick a color that will represent their voice, giving them personal agency over the final outcome. In case participants struggle to hold a conversation, there is a container of written prompts that can set the conversation topic. Physically picking a random prompt is intentional: it gives participants a sense of control amidst the randomness.

When a participant speaks, their voice draws kaleidoscope strokes on a blank canvas, which is displayed on a TV. As the conversation progresses, older strokes decay to avoid overcrowding.
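The kaleidoscope effect comes from rotational and mirror symmetry around the centre of the canvas. The following is a minimal plain-JavaScript illustration of that geometry, not our original p5.js code. It assumes the canvas origin is at its centre and uses a hypothetical `symmetry` count, turning one stroke segment into its rotated and mirrored copies. In p5.js the same result is usually achieved with `rotate()` and `scale(1, -1)` inside `push()`/`pop()` rather than by computing the points by hand.

```javascript
// Minimal sketch of kaleidoscope symmetry (assumption: the canvas origin is
// its centre and `symmetry` is a hypothetical number of slices).
// Each input segment is copied `symmetry` times around the centre, and each
// copy is also mirrored, so one stroke becomes 2 * symmetry strokes.

function rotatePoint(p, angle) {
  const c = Math.cos(angle);
  const s = Math.sin(angle);
  return { x: p.x * c - p.y * s, y: p.x * s + p.y * c };
}

function kaleidoscopeSegments(from, to, symmetry) {
  const step = (2 * Math.PI) / symmetry;
  const segments = [];
  for (let i = 0; i < symmetry; i++) {
    const angle = i * step;
    // Rotated copy of the original segment.
    segments.push([rotatePoint(from, angle), rotatePoint(to, angle)]);
    // Mirrored copy: reflect across the x-axis (y -> -y), then rotate.
    const mFrom = { x: from.x, y: -from.y };
    const mTo = { x: to.x, y: -to.y };
    segments.push([rotatePoint(mFrom, angle), rotatePoint(mTo, angle)]);
  }
  return segments;
}

// Usage: one short stroke becomes 12 strokes with 6-fold symmetry.
const strokes = kaleidoscopeSegments({ x: 10, y: 0 }, { x: 20, y: 5 }, 6);
console.log(strokes.length); // 12
```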

Once the conversation has ended, participants are asked to title their kaleidoscope. They can then scan a QR code to open our website and view a replay of their kaleidoscope by entering its title. The website also offers an option to view the replay without decay, so that the entire conversation is visible at once.
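The option to replay with or without decay amounts to a filter over the recorded strokes. The following is a minimal sketch, assuming each stroke stores the time `t` (in milliseconds from the start of the conversation) at which it was drawn and that all strokes share one lifespan; the names `strokesAt` and `LIFESPAN_MS` and the 5-second value are hypothetical.

```javascript
// Hypothetical lifespan shared by every stroke, in milliseconds.
const LIFESPAN_MS = 5000;

// Returns the strokes to draw at replay time `now`, each with an opacity
// between 0 and 1. With decay on, strokes fade linearly and disappear once
// they are older than the lifespan; with decay off, every stroke drawn so
// far stays fully opaque, showing the entire conversation.
function strokesAt(strokes, now, decay) {
  const visible = [];
  for (const stroke of strokes) {
    const age = now - stroke.t;
    if (age < 0) continue; // not drawn yet at this point in the replay
    if (!decay) {
      visible.push({ ...stroke, alpha: 1 });
    } else if (age < LIFESPAN_MS) {
      visible.push({ ...stroke, alpha: 1 - age / LIFESPAN_MS });
    }
  }
  return visible;
}

const recorded = [{ t: 0 }, { t: 4000 }, { t: 9000 }];
console.log(strokesAt(recorded, 8000, true).length);  // 1
console.log(strokesAt(recorded, 8000, false).length); // 2
```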

Development

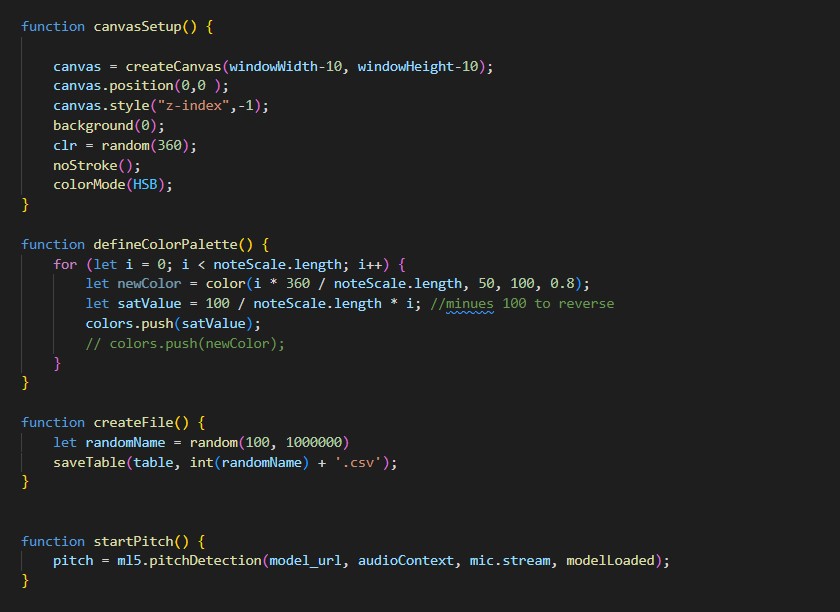

The main concepts of the code, such as the kaleidoscope and the use of WebSockets, were adapted from Daniel Shiffman’s Coding Train series and then modified to fit the theme of our project. p5.js, the JavaScript library we used, includes a sound library (p5.sound) that we used to take input from the user’s microphone. Once we could receive this input, we assigned it to a variable and used it to modify the parameters of the kaleidoscope.
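A minimal sketch of how an input level can drive kaleidoscope parameters follows. It assumes the input is a microphone amplitude between 0 and 1, which is what `getLevel()` on a p5.sound `p5.AudioIn` returns; the helper names, the 0 to 0.3 input range and the output ranges are hypothetical choices for illustration. `mapRange` mirrors p5's `map(value, start1, stop1, start2, stop2, true)`, where the final argument clamps the result, and is written out here so the sketch runs outside the browser.

```javascript
// Plain-JS stand-in for p5's map() with clamping enabled.
function mapRange(value, inMin, inMax, outMin, outMax) {
  const t = (value - inMin) / (inMax - inMin);
  const clamped = Math.min(Math.max(t, 0), 1);
  return outMin + clamped * (outMax - outMin);
}

// Hypothetical parameter ranges. `level` is assumed to be a microphone
// amplitude between 0 and 1; speech rarely reaches 1, so levels above the
// assumed 0.3 ceiling are clamped to the maximum output.
function kaleidoscopeParams(level) {
  return {
    strokeWeight: mapRange(level, 0, 0.3, 1, 12),
    segmentLength: mapRange(level, 0, 0.3, 2, 40),
  };
}

console.log(kaleidoscopeParams(0));   // { strokeWeight: 1, segmentLength: 2 }
console.log(kaleidoscopeParams(0.5)); // { strokeWeight: 12, segmentLength: 40 } (clamped)
```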

To track each participant’s pitch, we used a library called ml5.js. This pitch data was also used to modify the kaleidoscope. These elements made up the main body of our code. Although this code produced interesting outputs, the results held no meaning for the participants. To fix this, we added a particle class that gives each stroke its own life expectancy. This let us fade out and remove particles once they died, so participants could observe when a complete thought had been communicated.
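The particle idea can be sketched as follows. This is a hypothetical minimal version, not our actual class: `lifespan` is counted in frames, opacity falls linearly on p5's 0 to 255 alpha scale, and dead particles are filtered out on every frame.

```javascript
// Minimal sketch of a particle with a life expectancy counted in frames.
class Particle {
  constructor(x, y, colour, lifespan = 255) {
    this.x = x;
    this.y = y;
    this.colour = colour;
    this.maxLife = lifespan;
    this.life = lifespan;
  }

  // Called once per frame: ages the particle by one frame.
  update() {
    this.life -= 1;
  }

  // Opacity on p5's 0-255 alpha scale, falling linearly as the particle ages.
  alpha() {
    return Math.max(0, (this.life / this.maxLife) * 255);
  }

  isDead() {
    return this.life <= 0;
  }
}

// Per-frame loop: age every particle, then drop the dead ones so the canvas
// does not become overcrowded.
function step(particles) {
  for (const p of particles) p.update();
  return particles.filter((p) => !p.isDead());
}

let particles = [new Particle(0, 0, "red", 3), new Particle(5, 5, "blue", 10)];
for (let frame = 0; frame < 3; frame++) particles = step(particles);
console.log(particles.length); // 1 (the red particle has died)
```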

Next, to let participants differentiate between their voices, we added the ability to connect two computers to a server and draw a different coloured kaleidoscope for each user. To enable this, we used a server that transmits data from one program to the other over WebSockets, using the Socket.IO library.
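The relay step can be modelled without a network to show how the data flows. The following is an in-memory stand-in, not Socket.IO code: `RelayHub`, its methods and the stroke fields are hypothetical. On a real Socket.IO server the forwarding step would be `socket.broadcast.emit(eventName, data)`, which sends to every connected client except the sender; tagging each message with the sender's colour is what lets the receiving computer draw the other participant's strokes in that participant's colour.

```javascript
// In-memory model of the relay pattern (hypothetical, no network).
class RelayHub {
  constructor() {
    this.clients = new Map(); // client id -> { colour, onMessage }
  }

  // Register a computer with the colour its participant picked.
  join(id, colour, onMessage) {
    this.clients.set(id, { colour, onMessage });
  }

  // Forward one stroke from `fromId` to every other client, tagged with the
  // sender's colour so the receiver draws it in that colour.
  send(fromId, stroke) {
    const sender = this.clients.get(fromId);
    if (!sender) throw new Error(`unknown client: ${fromId}`);
    for (const [id, client] of this.clients) {
      if (id !== fromId) client.onMessage({ ...stroke, colour: sender.colour });
    }
  }
}

const hub = new RelayHub();
const receivedByA = [];
const receivedByB = [];
hub.join("A", "#ff0066", (msg) => receivedByA.push(msg));
hub.join("B", "#00ccff", (msg) => receivedByB.push(msg));
hub.send("A", { x: 12, y: -4, level: 0.2 });
console.log(receivedByB.length, receivedByB[0].colour); // 1 #ff0066
```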

The first time we tested Sockets

Daisy Loader

To let users go back and watch their conversation, all the data from the conversation was recorded and exported to a CSV file. We then created a new program from scratch by reverse-engineering the original code, named it “Daisy Loader”, and made it available to participants on a website. Each exported CSV file was named after the participants’ chosen title and added to an assets folder inside the Daisy Loader program. Daisy Loader accepts a string from a textbox and searches the assets folder for a corresponding CSV file. If one is found, the program replays the conversation for viewers to watch.
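Recording and looking up a conversation can be sketched as follows. The column layout (`t`, `user`, `x`, `y`, `level`), the helper names and the case-insensitive title matching are assumptions for illustration, and an in-memory `Map` stands in for the assets folder. The simple comma split is only safe because none of these fields contain commas; in the browser, p5.js's `loadTable()` can read such a file instead.

```javascript
// Hypothetical CSV layout: one row per recorded sample.
const HEADER = ["t", "user", "x", "y", "level"];

function toCsv(rows) {
  const lines = [HEADER.join(",")];
  for (const r of rows) lines.push(HEADER.map((k) => r[k]).join(","));
  return lines.join("\n");
}

// Parse the CSV back into objects; every column except `user` is numeric.
function fromCsv(text) {
  const [headerLine, ...lines] = text.trim().split("\n");
  const keys = headerLine.split(",");
  return lines.map((line) => {
    const cells = line.split(",");
    const row = {};
    keys.forEach((k, i) => {
      row[k] = k === "user" ? cells[i] : Number(cells[i]);
    });
    return row;
  });
}

// Stand-in for the assets folder: normalised title -> CSV text.
// Assumption: titles match after trimming and lower-casing.
const assets = new Map();
const normaliseTitle = (title) => title.trim().toLowerCase();

function saveConversation(title, rows) {
  assets.set(normaliseTitle(title), toCsv(rows));
}

// Returns the recorded rows, or null when no file matches the title.
function loadConversation(title) {
  const csv = assets.get(normaliseTitle(title));
  return csv === undefined ? null : fromCsv(csv);
}

saveConversation("Morning Chat", [
  { t: 0, user: "A", x: 12, y: -4, level: 0.2 },
  { t: 40, user: "B", x: 3, y: 7, level: 0.05 },
]);
const loaded = loadConversation("  morning chat ");
console.log(loaded.length); // 2
```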