Toru Iwatake interviewed Barry Truax on August 7, 1991 at the Department of Communication, Simon Fraser University. Published version: "Interview with Barry Truax," Computer Music Journal, 18(3), 1994, 17-24. An Italian translation is available here (thanks to Ahsan Soomro).

See also the interview with Paula Gordon including RealAudio clips on the Paula Gordon Show.

You can also read a more extensive interview with Michael Karman and reviews on the Asymmetry website (posted Feb. 10/09)

An interview with Raquel Castro in Lisbon in 2017, published in Lisboa Soa (2016-2020), Sound Art, Ecology and Auditory Culture

An early interview (late 1970s) with Norma Beecroft, published in Conversations with Post World War II Pioneers of Electronic Music, Canadian Music Centre, 2015

A three-part interview with Prof. Jan Marontate in the Sonic Research Studio, SFU, July 7, 2012 (original video on the WSP Database) Part 1, Part 2, Part 3

plus other radio and video interviews listed here.

Early Musical Experience

Iwatake: As a child, how did you get interested in music?

Truax: Well, I think that music was simply a part of our family life. I was raised by my grandmother and she played very ordinary piano, but my father, who was and is a very good musician, a percussionist, had learned to play the xylophone, and then later had acquired a marimba, a very beautiful rosewood-keyed Deagan marimba (whose sounds I used in my piece Nightwatch), and he played that all through his life. So I grew up with the sound of the piano and the sound of this beautiful instrument, the marimba, and I didn't realize how unusual that was. I remember when I was very young not being able to reach the keys of the marimba and having to stand up on a little stool...

Iwatake: And how old were you then?

Truax: Probably four or five years old. But anyway, these two instruments were there but my parents and grandparents didn't think that the marimba was a very suitable instrument, it wasn't very practical, so they sent me off to have piano lessons. Which was fine. So at a pretty young age I started playing the piano and it has accompanied me ever since as a wonderful instrument to play. And then when I went on to university I was more or less a typical product of the 1960s: if you were young, male, and bright, you were encouraged to go into science. So I decided to take my undergraduate studies at Queen's University in physics and mathematics. Music and art were my hobby and my greatest passion and interest, but there was a very clear distinction going on there. But the summer of 1968 was the turning point. It had perhaps been prepared a little by the summer of 1967, which I spent in Montreal during Canada's Expo 67, our centennial year, and there I was exposed to an incredible amount of music and art from all around the world. I also encountered my first synthesizer, because one of the pavilions there, the Jeunesses Musicales pavilion, had a synthesizer that I later realized was from the University of Toronto studio [designed by Hugh Le Caine]. I remember spending an afternoon there in May of 1967 playing around, trying to figure out this synthesizer, and I probably heard some electronic music too because there was a lot of music at Expo 67.

Then in the summer of '68 I was working at Queen's University in the physics department and during lunch hours and off hours, I started to do something on the piano that might be called composition. And that was the first time that I really felt creatively involved in music. And at the same time I found that working in science, I could do it intellectually but I had no real passionate interest. So there was a crisis at this point. When I went into my last year of the honours degree in science, the problem was there. I was able to get good grades, but then, was I going to go on to graduate school and if so in what area? Well the closest I could come to generating any enthusiasm in science was radio astronomy. And so I said that that was what I was going to do, and in fact I even got a scholarship to do that. But at the same time I was applying for acceptance into a music program and it came down to a choice between McGill University where they wanted me to do a very traditional type of music program, and the University of British Columbia who were a bit more liberal about it. To make a long story short, I completed the science degree and came out to the University of British Columbia in 1969, walked into the electronic music studio at UBC and in a certain sense, never came out. Because as soon as I found myself in an electronic music studio - and in those days that meant analog voltage control, Buchla, Moog, tape recorders - the marriage of music and technology suited me absolutely perfectly. So I abandoned the radio astronomy...

Iwatake: Good for you...

Truax: It was great. I only go into that level of detail, just to show you how far we've come, 20 years later. In the 1960s it was not, in Canada anyway, regarded as a normal thing to do. There were no courses. If you were lucky maybe you could find a small studio and maybe one professor at a university level who would say you can start here, but you were starting at a graduate level. And now, we're trying to keep up with the kids in high school who are already into it. So there is a big difference.

Iwatake: I want to know if you ever composed any instrumental pieces or not?

Truax: I did at UBC, because the piano was all that I knew, and this piano sonata that I had started as a refugee from the physics department at Queen's University, that became my first composition. There were two piano pieces that I did and a few other small instrumental things, but it was clearly not my direction. It was the bridge to the electronic music studio, because I can now look back and say that was the first interactive composition. That's what I've done since with the computer, interactive composition where the sound is very important for me as the feedback in the compositional process. The only version of that that I had when I started was the piano. And I immediately gravitated to algorithmic processes, not necessarily serial composition, but things that would use some kind of system. The first instrumental work that I did was based on dividing the 12 tones into two hexachords. I was very interested in permutations but yet getting something musical out of that system of organization.

Institute of Sonology, Utrecht

After UBC I pursued studies at the Institute of Sonology in Utrecht with Koenig and Laske, and those studies ended with my first computer composition. I think it's quite significant how it came about. John Chowning made a visit in early 1973 and I believe he played us Turenas. I was in the computer room afterwards and he said, "Well, show me what you're doing." And I showed him my first little crude attempts at sound synthesis with fixed waveforms and amplitude modulation, and he basically said, there's a better way, it's called FM. He sketched out for me the principles of frequency modulation, and within the next two months I realized an algorithm for doing FM in real time. Last year, at a historical survey in Bourges where Chowning spoke, I asked him afterwards, "Was my little implementation of FM in 1973 at Utrecht in fact the first real-time FM?" And he said, yes, it probably was. I never realized that. It was so new, even for him, but it provided exactly the kind of richness of sound that was lacking in the regular wavetable type of synthesis. So that was a turning point for me with the computer, and my first computer composition was done using FM sound in 1973.
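For readers unfamiliar with the technique Chowning sketched, here is a minimal, non-real-time sketch of simple FM (one sine carrier, one sine modulator) using the standard formula y(t) = sin(2π·fc·t + I(t)·sin(2π·fm·t)). It is not Truax's 1973 Utrecht implementation, whose details the interview does not give; the function names, the decaying index envelope, and the parameter values are hypothetical choices for illustration. Sidebands appear at fc ± k·fm, and a larger index I spreads energy into more of them, so a single time-varying number controls how bright the spectrum is over the course of a note.

```python
import math
from typing import Callable, List

def fm_tone(carrier_hz: float, modulator_hz: float,
            index: Callable[[float], float], duration_s: float,
            sample_rate: int = 44100) -> List[float]:
    """Simple FM: y(t) = sin(2*pi*fc*t + I(t) * sin(2*pi*fm*t)).
    A time-varying modulation index I(t) changes the bandwidth,
    and therefore the timbre, while the note sounds."""
    samples = []
    for n in range(int(duration_s * sample_rate)):
        t = n / sample_rate
        modulator = math.sin(2 * math.pi * modulator_hz * t)
        samples.append(math.sin(2 * math.pi * carrier_hz * t + index(t) * modulator))
    return samples

def decaying_index(peak: float, duration_s: float) -> Callable[[float], float]:
    """Hypothetical index envelope: falls linearly from `peak` to 0 over the note."""
    return lambda t: peak * max(0.0, 1.0 - t / duration_s)

# Half a second of FM with carrier = modulator = 440 Hz and a decaying index.
tone = fm_tone(440.0, 440.0, decaying_index(5.0, 0.5), 0.5)
```

With fc equal to fm the sidebands fall on multiples of 440 Hz, giving a harmonic spectrum that becomes purer as the index decays toward zero; with an index of zero the output is just a sine wave at the carrier frequency.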

Barry at the console of the quad mixing studio, Institute of Sonology, ca. 1973

The other influence was when I visited the Stockholm electronic music studio in 1971 and '72 and met Knut Wiggen, the first director. He had a tremendous impact on me at that point by showing me how necessary the computer was for musical thought. He just said, basically, you need the computer. I think now that what he meant was that new concepts of music require us to go beyond all of the old instrumental music habits we carry around inside us, and we need some form of external intelligence to handle the processes we're opening ourselves up to. But at the time I was just thunderstruck. I can still see him standing there, in the huge control room of the old EMS, with its white walls and futuristic console, quietly imagining a whole new musical world. I've always regretted that when he left EMS to retire in Norway he completely dropped out of sight, and no one now has heard any of the beautiful pieces he did there; perhaps they'll be rediscovered some day. Anyway, that was the critical point. Before then I'd worked entirely in the analog voltage-controlled studio, but I discovered that to finish this composition, a large music theatre piece called Gilgamesh, one tape solo remained to be done, and at that point, with the FM sound, I needed the computer and the algorithmic processes to control its complexity. So Wiggen was right: what hooked me on the computer was the point where I needed it compositionally to do something that I literally couldn't imagine doing in any other way.

World Soundscape Project and Acoustic Communication

I spent two years at the Institute of Sonology and then wanted to come back to Canada. I had met Murray Schafer in Vancouver, when I was a graduate student here and had been very interested in his work as a composer, and so I wrote a letter to Simon Fraser University, and the letter arrived on Murray Schafer's desk. He wrote back and said that he was founding this project called the World Soundscape Project and he invited me to come and work with them.

Iwatake: This was when?

Truax: 1973. And this to me was a breath of fresh air, because in Utrecht, working on the computer and in the studios in the middle of an extremely noisy European city, the contrast between the refinement of sound, all of the abstract thinking that we were doing in the studio, and how crude the sound was in the actual center of the city, was to me pretty shocking. And here was somebody who was cutting through that and saying we should be not just in the studio, we should be educating the ears of everyone who experiences the impact of noise. So I came back and worked with Schafer, who had started teaching in this new Department of Communication, with the World Soundscape Project, and that adds the other element that's been very important for me, the interest in environmental sound. At the same time as I was working with him, I was trying to set up a computer music facility on the minicomputer of anyone on campus who would let me use it in the off hours.

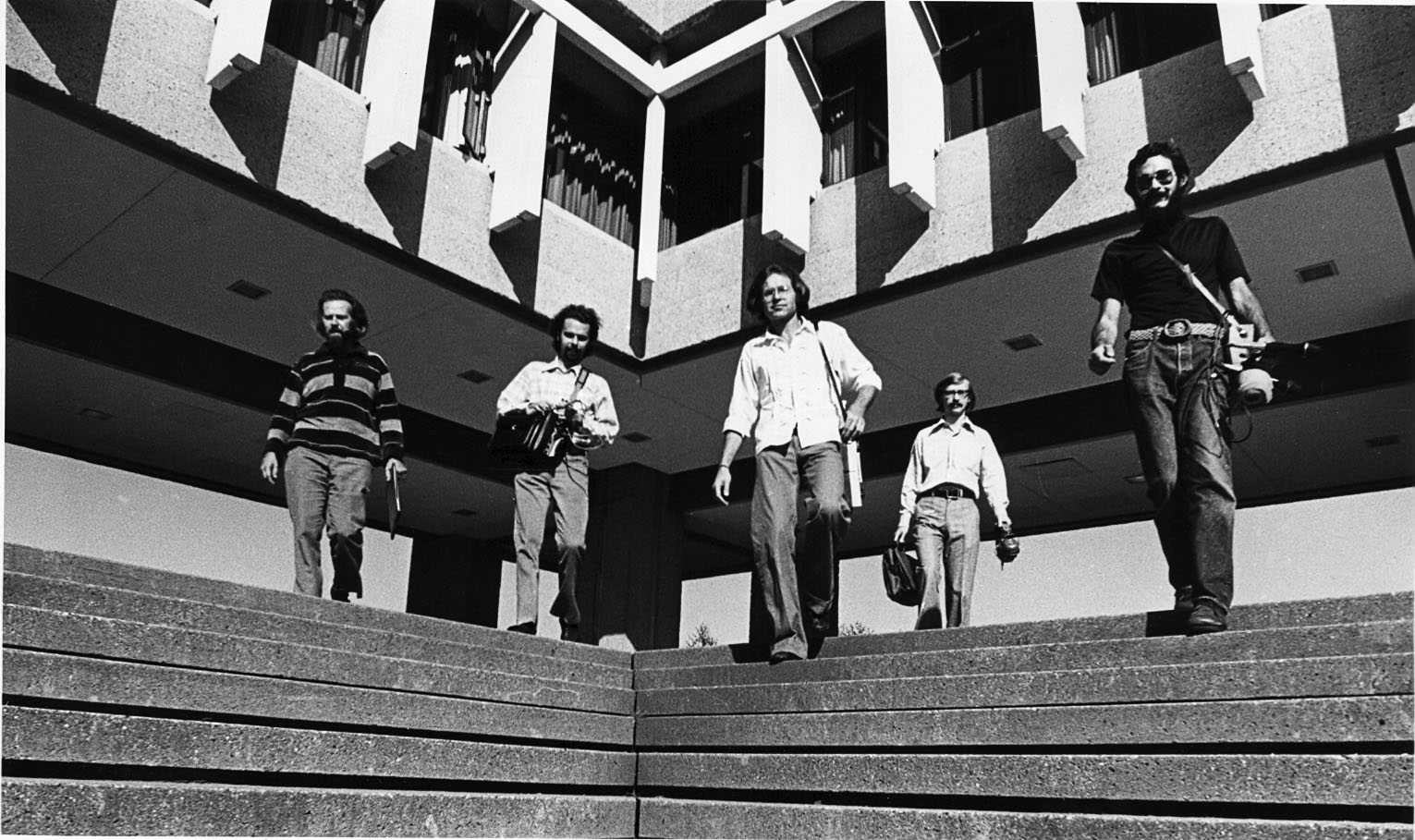

World Soundscape Project team at SFU in 1973. Left to right: R. Murray Schafer, Bruce Davis, Peter Huse, Barry Truax, Howard Broomfield.

So now I am Murray Schafer's successor here. I've established a program in acoustic communication, and we've also established an interdisciplinary program in contemporary music in the Centre for the Arts that has a very strong emphasis on computer music. So now I do both: acoustic and electroacoustic communication, that is, the social science aspect, the impact of sound and technology on the environment and the media; and computer music in terms of synthesis and composition.

Iwatake: I think that that makes you a very unique composer in the world. Do you find any other colleagues like yourself?

Truax: Unfortunately people in computer music do not seem to have the same kind of broader concerns for social issues or the media, or if they do they don't see these as related to their professional work. For the most part composers seem wedded to abstract music, despite the fact that this limits their audience and places them on the fringes of the culture. Their work doesn't influence the environment and they don't let the environment influence their music. Then they wonder why no one pays much attention! The only alternative seems to be commercial music and those who "cross over" are immediately suspect, though perhaps secretly envied. The great irony is that society is in desperate need of the kind of aural sensibility associated with music, particularly in the soundscape and the humane use of technology, but the composers are busy with their esoteric concerns that answer neither society's practical needs nor its search for meaning. Of course to change that, we'd have to broaden the notion of what being a composer means and what constitutes serious music. That's not likely to happen within the traditional music schools.

The divisions are fairly traditional and deep, and this kind of cross-disciplinary research, which has been very much encouraged at Simon Fraser University, involves the kind of breaking down of disciplinary barriers that is absolutely necessary, because the old traditional disciplines just cannot answer the kinds of questions we face today. The same kind of technology that we're using for computer music is being used for pop music, broadcasting and advertising, so why just study it from a purely musical point of view? Our students find that they are more qualified if they can bridge these kinds of barriers, because very few people make their living just in music, and even fewer in contemporary music. On the other hand, there's a crying need for artistic sensibility applied to the environment, applied to the media, applied to education. And yet the schools are still training people in very traditional ways and wondering why they can't find jobs.

Computers & Environmental Sound Composition

Iwatake: So you are linking computer music to the sound environment?

Truax: Well, actually until recently they had been quite separate in my compositional work, or at least in what I teach. In many ways I have been working toward a re-integration of those ideas in the last year or two, but they were fairly separate when I arrived. Teaching the broader range of students we have here in Communication, though, is a wonderful way to apply my experience with sound and technology, encouraging them to listen more carefully and study the role sound has in their lives, and to use technology less manipulatively and more creatively as a tool for discovery.

Iwatake: What do you find important about computers?

Truax: Well, I think in keeping with the background I've given you, you can see that there are two directions that I'm interested in. The first is the incredible importance of the computer in being able to deal directly with sound. I'm a composer whose music finds its basis, its inspiration, its whole direction, even its structure, in sound. I have to be able to hear it. I do respect abstract thinking and structuring, and because of my interest in algorithmic processes I have a great interest in that, but the sound is the basis of everything, and that means being able to design the sound directly. First of all it was with FM, where you have the richness of the dynamic quality of sound that is somewhat similar to environmental sound, but yet you can go in through software control and get at the micro level of it. There's a whole trajectory, you might say, of my work, first with FM sound, then leading to granular sound that emphasizes its richness and dynamic quality, but also the micro-level design of it, and on to the granulation of acoustic sound where the task is more to reveal its inner complexity. So that is incredibly important, and of course today it's a big issue, because we are still faced with that problem in the MIDI-controlled synthesizer. It's rather deficient in allowing you access to the micro level, and of course now we are all hoping that digital signal processing will introduce that possibility. I was fortunate in 1982 to be able to acquire Digital Music Systems' DMX-1000 signal processor, which is an early form of real-time DSP. I've been working ever since at that level of software, micro-programming of the sound, and that's what allowed me to get into granular synthesis.

The other aspect is the computer as an aid to compositional thinking, and you can see from what I've said before about algorithmic and stochastic composition that this has always been very important to me. I remember when I first encountered computer music I had two objections: first, I didn't like the sounds, and I've described the things that I think have solved that problem; and second, in terms of the compositional process, I thought, very naively as a student then, why use a computer if it only produces what you tell it to produce? If you have to write a score into the computer and specify every parameter down to the last minuscule detail, why would you do it, other than that maybe it was easier than getting an orchestra to do it for you, something like that? But this is where Koenig and Wiggen made me realize that the computer could do more than just what you told it to do. And that is a paradox, because how can it? Well, for example, top-down processes. First of all, they change the process of compositional thinking.

As soon as you start using programs to replicate part of the organizational process, things start happening. It starts changing the way you think about sound and its organization. And that's the point where the computer becomes indispensable. That's the key. The whole evolution of my PODX system, as it's now called, has been the result of compositional thinking and the way the computer and programming and sound generation lead to new ideas about sound and its structure, and in fact, those two streams are coming closer and closer together. What used to be a strict division between score and orchestra, sound object and compositional score file, is disappearing in the way I'm programming, and now with granular synthesis they're becoming virtually indistinguishable. Sound and structure are being united and you cannot describe one except in terms of the other.

We're talking about the computer as an aid to compositional thinking - it really becomes a framework for thinking. But how do you set up a studio such that it can evolve according to composers' needs, as opposed to being directed by only technical needs? It's very hard to have the sophisticated technology which we feel that we need today, and yet make it responsive to composers' ideas, open ended, as opposed to closed. It's very difficult to achieve that nowadays in a computer studio.

Iwatake: But doesn't your software reflect only your own thinking, your own personal development path?

Truax: That has been everybody's fear about more powerful computer music languages, and there's a fundamental problem: when music and technology meet, the technologists are trained to be general purpose. They want to be neutral, more objective, they want to do things in a modular fashion where everything is possible so they do not prejudice the system, but they also do not "prejudice" the system with any musical knowledge. And this to me is the fundamental problem of computer software, particularly when it's designed according to computer science principles. How do you put the intelligence into it, when they regard that as prejudicing the system, making it more idiosyncratic? And they fear that. It's a fundamental problem that has not been solved.

Now there's some AI work in creating expert systems, but it's not quite clear whether that's the way to go; still, it's one way to do it. But the problem from a composer's point of view in using a general-purpose system like Music V or Cmusic or Csound is that you have to reconstruct the musical knowledge in the computer's terms, and very few composers know how to make that translation. They're not trained to think along those lines. So how my system differs is that, first of all, there is a general-purpose level, which has been used by at least two dozen composers with a wide range of stylistic approaches. But there are also very strong procedures, strong musical models that can also be used by a wide variety of composers, maybe not by absolutely everyone; no one program can be. But that's the beauty of the computer: you can have more than one piece of software and more than one approach. You don't have to have one system that embodies everything.

So I still feel very strongly that you need to incorporate musical knowledge if only to stimulate the composer's imagination. Because composers may not be very good at saying explicitly what they want, in a language that is programmable, because they're not trained to do that. But they are very good at recognizing raw material, ideas that are beginning, that are rough, and that inspire their musical thinking and they'll say, oh, I could do that with it. Now the trick is, to get enough technical facility so that you can produce something that will stimulate the composer's imagination and then to funnel those directions back into the software development. So that software development isn't just somebody's abstract idea of how to put together a signal processing based system, but it's a process to funnel musical ideas back into software development.

Granular Synthesis

Iwatake: Then perhaps I want to hear about granular synthesis.

Truax: OK. Let's finish off with that. It seemed in 1986 that there was an abrupt transition for me. It was almost as if I woke up one morning in March 1986 and wrote a granular synthesis program, out of the blue. In retrospect I see now that it wasn't that abrupt, it was a logical progression from my previous work with FM which had gone from a mapping of timbre onto a frequency-time field, through to constructing complex FM timbres from increasingly simpler and smaller components. By 1985 in Solar Ellipse those components had crossed the 50 millisecond threshold and even had the linear three-part envelope I now use for grains, but then it was for a different reason, namely to construct smooth, continuous sounding spatial trajectories.

However, granular synthesis is a completely different concept of what sound is, what music is, what composition is. First of all, it's a very different idea about what sound is, because it takes a model that goes back to at least the late 1940s, with Dennis Gabor, the British-Hungarian physicist, who proposed the idea of the quantum of sound that is a small event in frequency and in time, as opposed to the dominant, mainstream Fourier model that constructs sound in frequency space and does not have any reference to time at its fundamental level. Time is added later, with much difficulty, but the basic idea of additive synthesis and the Fourier model arrives at the fixed waveform and/or harmonic envelopes on each one of the oscillators. And basically ever since then we have been fighting with the unnaturalness of the Fourier model, all because of its difficulty in dealing with time dependence.
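To make the idea of a sound quantum concrete, here is a minimal sketch of one Gabor-style elementary signal: a cosine under a Gaussian window, so the event is localized in time around `center_s` and in frequency around `freq_hz`. The function name and parameters are hypothetical, and the Gaussian window is the textbook choice associated with Gabor rather than anything specified in the interview. With the window exp(-π(t/w)²), the spread in time is about w and the spread in frequency about 1/w, so making the grain shorter necessarily blurs its pitch, which is the time-frequency trade-off the Fourier model leaves out at its basic level.

```python
import math
from typing import List

def gabor_grain(freq_hz: float, center_s: float, width_s: float,
                duration_s: float, sample_rate: int = 44100) -> List[float]:
    """Gabor-style elementary signal: a cosine at freq_hz under a Gaussian
    window centred at center_s. width_s sets the spread in time; the
    spread in frequency is roughly 1 / width_s."""
    samples = []
    for n in range(int(duration_s * sample_rate)):
        t = n / sample_rate
        window = math.exp(-math.pi * ((t - center_s) / width_s) ** 2)
        samples.append(window * math.cos(2 * math.pi * freq_hz * (t - center_s)))
    return samples

# A 10 ms-wide grain at 100 Hz, centred in a 100 ms buffer sampled at 1 kHz.
g = gabor_grain(100.0, 0.05, 0.01, 0.1, sample_rate=1000)
```

The grain reaches full amplitude at its centre and is effectively silent at both ends of the buffer.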

It's interesting sociologically, why one theory would be mainstream and come down to us in terms of oscillators, particularly sine wave oscillators. If you remember the first time you heard a sine wave oscillator your ears should have told you this is not a good sound, this is not an interesting musical sound. But we've put up with it for many years, decades, because we believed this theory was the only basis, and then it got instantiated in the computer with wavetable synthesis, and now through digital oscillators, and MIDI-controlled synthesizers, etc. Despite the smugness I detect when some people claim that we can produce any sound we want, I think we still have the same kind of problem at the synthesis level.

Interestingly enough, from the 1940s onward there was another small stream, off on the periphery, that said we should construct everything on the basis of an event; some people call it a grain, others call it a wavelet, but it's a model of frequency and time, linking them together right at the basic level. And theoretically this has been shown, in the meantime, to be as powerful as Fourier synthesis at reconstructing acoustic reality. Recent work in Marseilles by Richard Kronland-Martinet has actually realized that, not just in theory but in practice, where he's done an analysis and re-synthesis using what he calls the wavelet transform.

Now my approach has been a little bit different: there's research involved, but it's been to develop a tool for synthesis and composition. And if I have any little claim to fame here, it's that in 1986 I was the first person to realize this technique in real time. It's interesting because it was technically possible long before 1986. Why did it take so long for somebody with modest means, our PDP and DMX-1000, to do this, when it was technically feasible earlier? Maybe because it really changes the way you think about sound. Before that, granular synthesis was a textbook synthesis method, and the difficulty was that it was so data intensive. You had to produce hundreds, if not thousands, of very small micro-level events, called grains, every second.

Iwatake: How long does this last, each grain?

Truax: Anywhere from let's say 10 to 50 milliseconds and there's a lot of interesting psychoacoustics at that level because that's where sound fuses together as opposed to being distinct events. So both technically, in terms of data, and conceptually, because it departs from the Fourier model, it just hadn't been done before in real time. Curtis Roads had done it in non-real time, heroically, hundreds of hours of calculation time on mainframes, just to get a few seconds of sound. He had done that, but it remained a textbook case. As soon as I started working with it in real time and heard the sound, it was rich, it was appealing to the ear, immediately, even with just sine waves as the grains. Suddenly they came to life. They had a sense of what I now call volume, as opposed to loudness. They had a sense of magnitude, of size, of weight, just like sounds in the environment do. And it's not I think coincidental that the first piece I did in 1986 called Riverrun, which was the first piece realized entirely with real-time granular synthesis, modelled itself, as the title suggests, on the flow of a river from the smallest droplets or grains, to the magnificence, particularly in British Columbia, of rivers that are sometimes very frightening, they cut through mountains, they have huge cataracts, and they eventually arrive at the sea. Well this is broadly speaking the progression of the piece, creating this huge sense of volume and magnificence from totally microscopic and trivial grains.
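The grain description above can be sketched as a small, non-real-time asynchronous granular synthesizer: sine-wave grains of 10 to 50 ms, each shaped by a linear three-part envelope (attack, steady state, decay) so that it starts and ends at zero, scattered at random onsets and summed. This is only an illustration, not Truax's PODX/DMX-1000 implementation; the function names, the 25% ramp proportion, the frequency band, the fixed random seed, and the peak normalization are all assumptions. The helper `average_overlap` shows why the method is so data intensive: at 1000 grains per second with a mean duration of 30 ms, about 30 grains are sounding at any instant.

```python
import math
import random
from typing import List, Tuple

def linear_envelope(n_samples: int, ramp_fraction: float = 0.25) -> List[float]:
    """Three-part linear envelope so a grain starts and ends at zero (no clicks).
    ramp_fraction is an assumed value and should stay at or below 0.5."""
    ramp = max(1, int(n_samples * ramp_fraction))
    env = []
    for i in range(n_samples):
        if i < ramp:
            env.append(i / ramp)                       # linear attack
        elif i >= n_samples - ramp:
            env.append((n_samples - 1 - i) / ramp)     # linear decay
        else:
            env.append(1.0)                            # steady state
    return env

def average_overlap(density_per_s: float, mean_grain_s: float) -> float:
    """Expected number of simultaneously sounding grains: density times duration."""
    return density_per_s * mean_grain_s

def granular_sine(duration_s: float, density_per_s: float,
                  grain_ms: Tuple[float, float] = (10.0, 50.0),
                  freq_hz: Tuple[float, float] = (300.0, 600.0),
                  sample_rate: int = 22050, seed: int = 0) -> List[float]:
    """Asynchronous granular synthesis: sine grains with random onsets,
    durations and frequencies, overlap-added and peak-normalized."""
    rng = random.Random(seed)
    total = int(duration_s * sample_rate)
    out = [0.0] * total
    for _ in range(int(duration_s * density_per_s)):
        length = int(rng.uniform(*grain_ms) / 1000.0 * sample_rate)
        start = rng.randrange(0, max(1, total - length))
        freq = rng.uniform(*freq_hz)
        env = linear_envelope(length)
        for i in range(min(length, total - start)):
            out[start + i] += env[i] * math.sin(2 * math.pi * freq * i / sample_rate)
    peak = max((abs(s) for s in out), default=0.0)
    return [s / peak for s in out] if peak > 0 else out

# Half a second of texture at 200 grains per second.
sig = granular_sine(0.5, 200, seed=1)
```

Raising `density_per_s` toward the thousands is what turns individual grains into a fused, continuous texture; narrowing the frequency band pulls the texture toward a single pitch.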

Iwatake: So in your opinion, there is a reason that it didn't happen before 1986?

Truax: There's a very similar change of mindset happening now in non-linear dynamics in physics, in so-called chaos theory. Why did the study of dynamic systems, such as the pendulum, go on for 300 years assuming linear predictability and ignoring anything that was non-linear? Because they couldn't deal with it. Fourier analysis and re-synthesis is a linear model. And now, instead of thinking that the world is fundamentally linear with a few troublesome little pockets of non-linearity, we realize in physics that the world is fundamentally non-linear and any regularity is the exception. So my recent work during my sabbatical has tried to combine chaos theory with granular synthesis.

In the last few years I've been able to extend granular synthesis to deal with sampled sound. At first, because of technical limitations, they were very short samples, 150 milliseconds. But I was able to magnify, in a sense, very short phonemes. The first came from the voice: this piece of mine, The Wings of Nike, deals with just two phoneme sequences, a male and a female one, "tuh" and "ah", very, very short, but there are 12 minutes of music that come out of them. And that amount of magnification is achieved by going into the micro level of the timbre.

And then the final breakthrough that I'm very pleased about is that in 1990, I was finally able to get a very high quality A to D converter connected to our machine and I can now deal with full scale environmental sounds. And this is where the two things finally come together, my interest in the soundscape and the interest in computer music. It's a wonderful breakthrough, and it erupted, literally erupted, in the first few months of 1990, into a major composition, 36 minutes long, called Pacific, that is entirely based on the granulation of environmental materials from the Pacific region - ocean waves, boat horns and seagulls in Vancouver harbour, and the final movement, which was the real inspiration for the piece, the Chinese dragon dance from the Chinese New Year celebration.

Iwatake: How do you deal with these sounds?

Truax: The technique that I have developed stretches the sounds in time without changing pitch. Because you're dealing with small units of sound, you can repeat or magnify instants and slowly move through the sound, because you're working at the micro level of the grains. The grains are enveloped so there are no clicks or transients, so the sound is just smoothly stretched, and that allows you to go inside the sound. For instance, with the ocean waves, we normally think of them as noisy sounds, but inside of them there are formant regions, momentary formants, as in vocal-type sounds, so out of those waves come voices and other types of sounds, and these transformations suggest metaphors for human experience, in this case birth ... So that's where I am today, dealing with environmental sounds full-scale, time-stretching them with this kind of granulation and working with their inherent imagery. I think one key thing about why we use computers is that it allows us to think differently about sound, and I've shown you some of the ways in which it has done that for me.
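The time-stretching principle described above can be sketched with overlap-add granulation: each output grain is copied from the source at its original playback rate, so pitch is unchanged, while the read position advances only 1/stretch as fast as the write position, so the sound lasts `stretch` times longer. This is a generic textbook sketch, not Truax's real-time granulation of sampled sound; the function name, the fixed grain length and hop, the Hann window (used here instead of the linear envelopes mentioned earlier in the interview), and the per-sample window normalization are all assumptions made to keep the example short and exact.

```python
import math
from typing import List, Sequence

def time_stretch(source: Sequence[float], stretch: float,
                 grain_len: int = 1024, hop: int = 256) -> List[float]:
    """Granular time-stretch by overlap-add. Grains are read at the original
    rate (pitch preserved); the read pointer moves 1/stretch as fast as the
    write pointer (duration scaled by `stretch`)."""
    # Periodic Hann window: smooth fade in/out so grain boundaries do not click.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / grain_len) for i in range(grain_len)]
    out_len = int(len(source) * stretch)
    out = [0.0] * (out_len + grain_len)
    weight = [0.0] * (out_len + grain_len)
    for out_pos in range(0, out_len, hop):
        read_pos = int(out_pos / stretch)   # slowly moving pointer into the source
        for i in range(grain_len):
            src = read_pos + i
            if src >= len(source):
                break
            out[out_pos + i] += window[i] * source[src]
            weight[out_pos + i] += window[i]
    # Divide by the summed window so overlapping grains do not change the level.
    return [o / w if w > 1e-6 else 0.0 for o, w in zip(out[:out_len], weight[:out_len])]

# One second of a 125 Hz sine at 8 kHz, stretched to two seconds.
sr = 8000
src = [math.sin(2 * math.pi * 125 * n / sr) for n in range(sr)]
longer = time_stretch(src, 2.0)
```

In this example the 128-sample jump in read position between grains is exactly two periods of the 125 Hz sine, so the stretched output is a clean two-second sine at the same pitch. With real environmental sound the grains do not line up so neatly, and overlapping grains blend the neighbouring instants, which is part of what makes the inner texture of a stretched sound audible.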

Computers & Complexity

The other role of the computer is very general: it's how to control complexity. It's the most powerful tool for controlling complexity. What makes the computer indispensable for thinking differently about sound is that it allows you to control complex situations, and as a result it changes your role as a composer. The composer's role now is not the 19th-century idea that you are divinely inspired to dictate every detail, such an egotistical trip, but that's how we still teach composition. We teach our students that abstract is best, but as far as I'm concerned, art is in context, and you deal with sound in context. I've just sketched out the idea of my Pacific Rim piece, which is not just abstract sound manipulation, musique concrète; it's not that at all. It's dealing with sound in a cultural and environmental context, and the key ingredient is that the computer allows you to explore the complexity and control the complexity. As a composer you are guiding the whole process and you're a source of compositional ideas, and that's really changing the whole role that the composer has. So as far as I'm concerned, we've turned a full 180 degrees from the instrumental music concept, both in terms of sound, the different model of sound, the different model of structure, and the different model of what it means to be a composer. And that's what I've been able to discover, what has worked for me with computers, and that's what makes me a computer music composer.